Collective Action for Fairness

Can people collaborate to make ML fair?

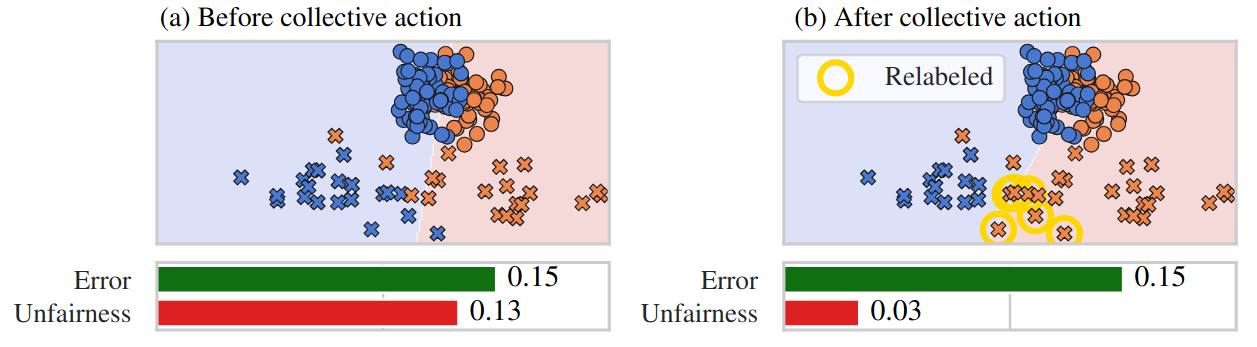

Minority-only collective action can substantially improve fairness. With just 6 label flips, the fairness violation of logistic regression drops by over 75%, with a negligible increase in prediction error. Circles and crosses represent majority and minority points, respectively.

Introduction

Machine learning often reflects and preserves real-world unfairness. Researchers have proposed many methods to address this, but these solutions share two features:

- They are designed for the firms that operate the models.

- They often reduce unfairness at the cost of increased prediction error.

This creates a paradox: firms may ignore the very solutions that promote fairness, because firms prioritize minimizing error and maximizing profit.

In today’s data-driven world, however, many large tech firms collect their users’ data to train machine learning models. Can these users leverage their agency over the data they share to promote fairness? In this project, we explore how a subgroup of users (particularly from underrepresented minorities) can strategically influence fairness outcomes by managing the data they contribute.
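To make the idea concrete, here is a minimal sketch of what "managing the data they contribute" could look like for label flips. It is an illustration under stated assumptions, not the method from the paper: the function names `demographic_parity_gap` and `flip_minority_labels` are hypothetical, the fairness measure is assumed to be the demographic parity gap, the gap is computed directly on the shared labels rather than on the predictions of a trained logistic regression, and the rule for choosing which points to flip (the first eligible ones in order) is a placeholder.

```python
from typing import List, Sequence


def demographic_parity_gap(outcomes: Sequence[int], is_minority: Sequence[bool]) -> float:
    """Absolute difference in positive-outcome rates between the two groups.

    Assumption: demographic parity stands in for the fairness violation here.
    """
    minority = [y for y, m in zip(outcomes, is_minority) if m]
    majority = [y for y, m in zip(outcomes, is_minority) if not m]
    if not minority or not majority:
        raise ValueError("both groups must be non-empty")
    return abs(sum(minority) / len(minority) - sum(majority) / len(majority))


def flip_minority_labels(labels: Sequence[int], is_minority: Sequence[bool], budget: int) -> List[int]:
    """Return a copy of `labels` with up to `budget` minority labels changed from 0 to 1.

    Placeholder selection rule: the first eligible minority points in order.
    Majority labels are never modified.
    """
    flipped = list(labels)
    remaining = budget
    for i, (y, m) in enumerate(zip(labels, is_minority)):
        if remaining == 0:
            break
        if m and y == 0:
            flipped[i] = 1
            remaining -= 1
    return flipped


# Toy data: four majority points followed by four minority points.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
is_minority = [False, False, False, False, True, True, True, True]

gap_before = demographic_parity_gap(labels, is_minority)   # 0.5
new_labels = flip_minority_labels(labels, is_minority, budget=2)
gap_after = demographic_parity_gap(new_labels, is_minority)  # 0.0
```

In this toy example, two flips by minority members close the gap in the shared labels. In practice the quantity of interest is the fairness violation of the model trained on the modified data, which this sketch does not measure.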

Resources

- For more details, results, and limitations, please read our research preprint.

- A conference poster presenting the paper.

- A recording of a non-technical talk and the slides from the Tübingen Days of Digital Freedom.

Collaborators

- Samira Samadi

- Amartya Sanyal

- Alexandru Țifrea